Two ELSA Labs on 'Human-Centered AI for an Inclusive Society - Towards an Ecosystem of Trust'

NWO and the Dutch AI Coalition have launched the NWA Call ‘Human-centered AI for an inclusive society – towards an ecosystem of trust’. Five projects have been awarded a total of more than 10 million euros in funding to set up Ethical, Legal and Societal Aspects (ELSA) Labs in the coming years. With the support of the City of The Hague, The Hague Security Delta (HSD) assisted in matchmaking prior to submission, connecting relevant stakeholders to the consortia and projects, e.g. by hosting several workshops for two of the five proposals. The HSD Office looks forward to continuing its efforts and collaboration in both projects, contributing to the development and application of reliable, human-centered AI for Peace, Justice and Security.

The research within all projects is conducted in co-creative environments. These so-called ELSA Labs are where coherent research into technological, economic and societal challenges takes place. The research funded under the NWA call 'Human-centered AI for an inclusive society' is intended to support the development of technological innovations that safeguard public values and fundamental rights, respect and, where possible, strengthen human rights, and can count on public support. In this way, the research contributes to building an ecosystem of trust.

The projects run for five to six years and will jointly submit a network project in June 2022. This network project aims to generalize the research results, scale up the solutions from the different ELSA Labs, build a learning community and create a blueprint for an ELSA Lab. The NWA call was initiated by the Ministries of Economic Affairs and Climate Policy; Defence; Justice and Security; the Interior and Kingdom Relations; and Education, Culture and Science.

Read more on the ELSA lab concept here.

About ELSA Lab Defence, led by TNO (Dr. J. van Diggelen)

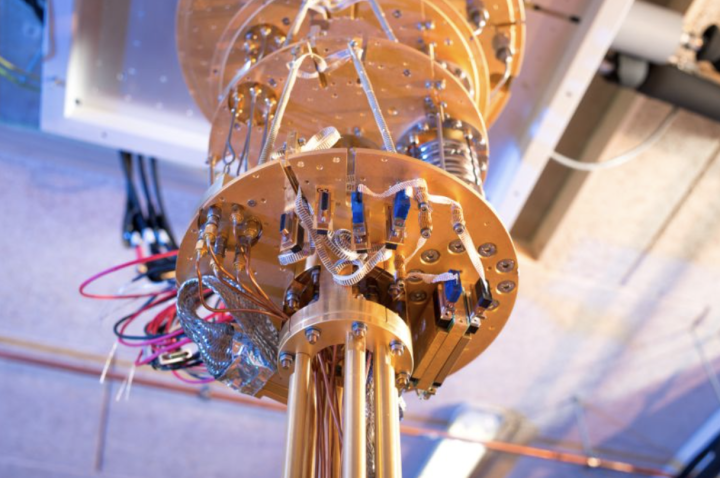

Artificial Intelligence (AI) plays an increasingly important role within the Dutch Ministry of Defence (MoD), e.g. in unmanned autonomous systems, AI-assisted military decision-making, autonomous logistics and predictive maintenance. In addition to improving the efficiency, effectiveness and safety of the Dutch armed forces, AI technology is needed to cope with new challenges in peacekeeping and warfare, such as dealing with misinformation, adversarial use of AI, and processing large amounts of (drone-borne) sensor data.

However, the introduction of AI technology in defence raises a host of ethical, legal and societal concerns, including how to keep AI-powered systems under meaningful human control, how to maintain human agency and dignity when giving autonomy to machines, and how to operate within legal boundaries. For responsible implementation, these and other aspects must be continuously addressed in the design and maintenance of AI-based systems, in military doctrine, and in training. How to do this is still insufficiently understood. The question arises how the MoD can remain strategically competitive and at the cutting edge of military innovation while respecting our values.

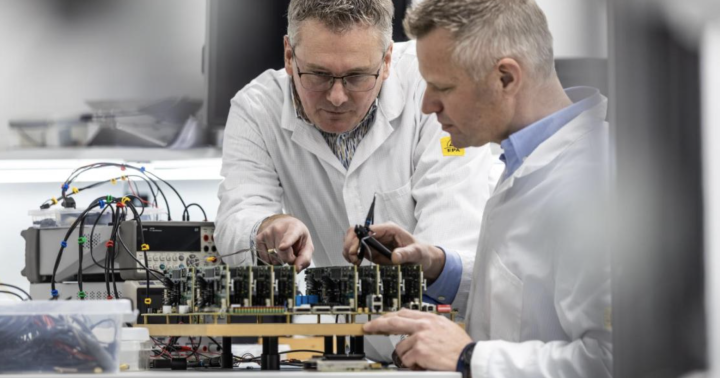

The ELSA Lab Defence addresses this question by developing a future-proof, independent and consultative ecosystem for the responsible use of AI in the defence domain. The lab will develop a methodology for the context-dependent analysis, design and evaluation of the ethical, legal and societal aspects of military AI-based applications. It builds on existing methods for value-sensitive design, explainable algorithms and human-machine teaming. These methods are adapted to the specific defence context through representative case studies, such as the use of (semi-)autonomous robots and AI-based methods for countering cognitive warfare. The lab also studies how society and defence personnel perceive the use of military AI, how this perception evolves over time, and how it changes across contexts. Additionally, the ELSA Lab Defence monitors global technological, military and societal developments that could influence this perception.

About the ‘AI for Multi-Agency Public Safety issues’ Lab, led by Erasmus University Rotterdam (Dr. G. Jacobs)

Public safety is vital for the functioning of societies: without safety, there is no freedom, no happiness and no prosperity. The public good of safety matters to all of us and therefore needs to be jointly shaped and maintained by all societal partners. Data generated by multiple agencies play an increasingly important role in preventing, preparing for and mitigating harm or disaster. Developing an ecosystem of trust around AI-assisted promotion of public safety is central to this ELSA Lab. In a variety of use cases, benefits and safeguards are analysed against the backdrop of private, public and machine agency.

About Human-Centred AI

NWO and the Dutch AI Coalition have, as part of the Dutch Research Agenda, launched the programme ‘Artificial Intelligence: Human-centred AI for an inclusive society – towards an ecosystem of trust’. The programme facilitates the development and application of reliable, human-centred AI and is partly funded by the kick-start funds that were made available by the Ministry of Economic Affairs and Climate Policy.

In this public-private partnership, government, industry, education and research institutions, and societal organisations work together to accelerate national AI developments and to connect existing initiatives. This NWA research programme links AI as a key enabling technology with AI research for an inclusive society. In doing so, it takes into account the national research agenda AIREA-NL as well as societal and policy issues.

Interested in the application of AI for Peace, Justice and Security? Register for the HSD Café on AI Applications for Security, Peace and Justice (AI toepassingen voor Veiligheid, Vrede en Recht) on 17 February. Or read more about our AI or Smart Secure Resilient Cities programmes.